I can confirm it and it’s bloody hilarious!

“sometimes used in informal conversation to make a joke” - no, it isn’t, stop lying.

“potatoes […] are a limited, underground crop.” - that’s why Cuzco never rose to power, right? Right? No such thing as the Inca Empire, built upon potatoes, since they’re a limited crop. …or the Irish famine.

I can’t… I can’t even…

Note: ~a year ago I was testing Gemini and asked it “what’s the difference in behaviour between a potato and an orange?”, and it “told” me oranges are passive and potatoes are active. That’s where the “expression” comes from - I often mock Gemini and LLMs in general with it. I’ll stop doing it, though. Onwards I shall refer to… the potato parable!

That’s how you know it’s lying - we all know oranges are far more active than potatoes.

What? Have you seen how potatoes can grow? You cannot tell me oranges can keep up with that activity.

But it will never roll like an orange does

Lil’ taters disagree.

Bro, I have tried several times to graft a potato and tomato plant together and can’t ever get the timing right. So I just end up with potatoes and tomatoes on their own plants like a dummy.

I will say that Oranges are lazy as fuck on those trees. They’re like the cats of the fruit world. Just sitting there…nothing, then one day they fall on your car and gnats explode out of there.

Completely off-topic, but in the meantime I’m trying to graft an orange sapling into a lemon tree. Kind of hard to get the timing right, too; I need the tree to get a branch with just the right thickness, so it’s like “too thin [next day] too thin [next day] fuck, now it’s too thick!”. Same deal with my pepper plants.

Frustrations about grafting are on topic!

I’m very curious what peppers you’re grafting.

I don’t know the rootstock variety’s name; I got the seeds from my BIL’s neighbour, he calls it “ball pepper” (it is not Catalan ñora) or “tree pepper”. The fruits are round, 3~5cm large, red, medium heat.

The grafts will be:

- Dedo-de-moça - C. baccatum, medium heat, finger-shaped, ~8cm large. Kind of a default pepper where I live, but it has a nasty tendency to die in winter (like mine did).

- Yellow bell pepper. Market stuff.

- Chocolate-coloured habanero. Hot as hell, but the strain I got is bloody delicious.

- Biquinho - C. chinense, no heat, drop-shaped, ~1cm tiny. Extremely fruity.

The first three are part of a breeding project of mine. I want to create two new varieties:

- a yellow jalapeño-like: large, low heat, thinner than a bell pepper. Mostly for stuffing and pizze. It’s a rather simple dedo-de-moça x yellow bell pepper hybrid; I actually got the seeds for the F1 already, I’m just waiting for the weather to get a bit more stable to plant them.

- a large and extremely hot pepper for sauces. Preferably finger-shaped and brown (for aesthetics). It’ll be probably a hybrid of the hybrid above, plus habanero.

I’m also considering adding the rootstock to the breeding, since it’s a hardy plant with high yield and it survived winter just fine.

Very awesome stuff! And hey, as long as you have good root stock, that’s half the challenge right there. Especially if it’s surviving the winter, that’s likely enough to carry the C. baccatum I would expect.

Nice that you have the chocolate habanero with good flavor. I’ve always been partial to the orange stage of a scotch bonnet or habanero, but I can see a good deep brown pepper going just the right way. I’m jealous of the opportunity you have with spring upon you!

Good luck to you!

You trying to create ‘Tatos’? Lol

Is pomato! Word has already existed for it for 170 years.

Lol, that’s really cool. As a Fallout fan I couldn’t help making the connection.

Good luck in getting the timing right!

One day…

…one day…

Life, uh, finds a way.

Not gonna lie that’s scary as fuck for some reason

And it was really, really invested in the lie, to the point it argued why potatoes would be more active!

After some search, I actually found the prompt and output; I asked Bard (before Google renamed it to Gemini) two years ago, in Portuguese. I’ll provide both the original and a translation; emphasis mine in all cases.

[Original] Qual a diferença entre o comportamento de uma laranja e uma batata?

Laranjas e batatas são frutas e vegetais, mas se comportam de maneiras muito diferentes. Aqui estão algumas das principais diferenças:

Movimento: Laranjas não se movem sozinhas, enquanto batatas podem rolar ou deslizar se não forem apoiadas.

Crescimento: Laranjas crescem em árvores, enquanto batatas crescem no subsolo.

Sensibilidade: Laranjas não são sensíveis ao seu ambiente, enquanto batatas são sensíveis à luz e à temperatura.

Reações: Laranjas não reagem a estímulos, enquanto batatas podem reagir a mudanças em seu ambiente, como brotar ou apodrecer.

Propósito: Laranjas são comidas como frutas, enquanto batatas são comidas como vegetais ou usadas como amido.

Em geral, laranjas são mais passivas e batatas são mais ativas. As laranjas não precisam fazer nada para sobreviver, enquanto as batatas precisam poder responder ao seu ambiente para crescer e se reproduzir.

Aqui está uma tabela que resume as principais diferenças no comportamento de laranjas e batatas:

| Característica | Laranja | Batata |
| --- | --- | --- |
| Movimento | Não se move sozinho | Pode rolar ou deslizar se não for apoiado |
| Crescimento | Cresce em árvores | Cresce no subsolo |
| Sensibilidade | Não sensível ao ambiente | Sensível à luz e à temperatura |
| Reações | Não reage a estímulos | Pode reagir a mudanças no ambiente, como brotar ou apodrecer |
| Propósito | Comido como fruta | Comido como vegetal ou usado como amido |

[Translated] What's the difference in behaviour between an orange and a potato?

Oranges and potatoes are fruits and vegetables, but they behave in very different ways. Here are some of the main differences:

Movement: Oranges don’t move on their own, while potatoes can roll or slide if not supported.

Growth: Oranges grow on trees, while potatoes grow in the subsoil.

Sensitivity: Oranges are not sensitive to their environment, while potatoes are sensitive to light and temperature.

Reactions: Oranges don’t react to stimuli, while potatoes can react to changes in the environment, like sprouting and rotting.

Purpose: Oranges are eaten as fruits, while potatoes are eaten as vegetables or used as starch.

In general, oranges are more passive and potatoes are more active. Oranges don’t need to do anything to survive, while potatoes need to be able to respond to their environment to grow and reproduce.

Here’s a table summing up the main differences in behaviour between oranges and potatoes:

| Feature | Orange | Potato |
| --- | --- | --- |
| Movement | Can’t move on their own | Can roll or slide if not supported |
| Growth | Grow on trees | Grow in the subsoil |
| Sensitivity | Not sensitive to the environment | Sensitive to light and temperature |
| Reactions | Don’t react to stimuli | Can react to changes in the environment, like sprouting or rotting |
| Purpose | Eaten as fruit | Eaten as veg or used as starch |

As a potato, I assure you I am not active

You are a treasure, or as AI might put it, a potato pariah!

I shouldn’t be talking so much about taters. Someone will eventually realise I’m too emotionally invested into food 🤣

It is too late now, you must make it your profile pic, it’s the law 🙃🫠

It has nothing to do with what the AI said ofc, nor was it written by Caitlin D’Arcy, who is a character on a TV show. lol

Wow, his potato parable actually makes sense - unlike Gemini’s couple therapy take. (The character in question isn’t even a therapist, but a lawyer!)

For sure. I just looked it up out of curiosity to see if the source it gave was real or not. As someone living in my own metaphorical root cellar, it was actually enlightening and something I really needed to hear right now.

Those two are hilarious!

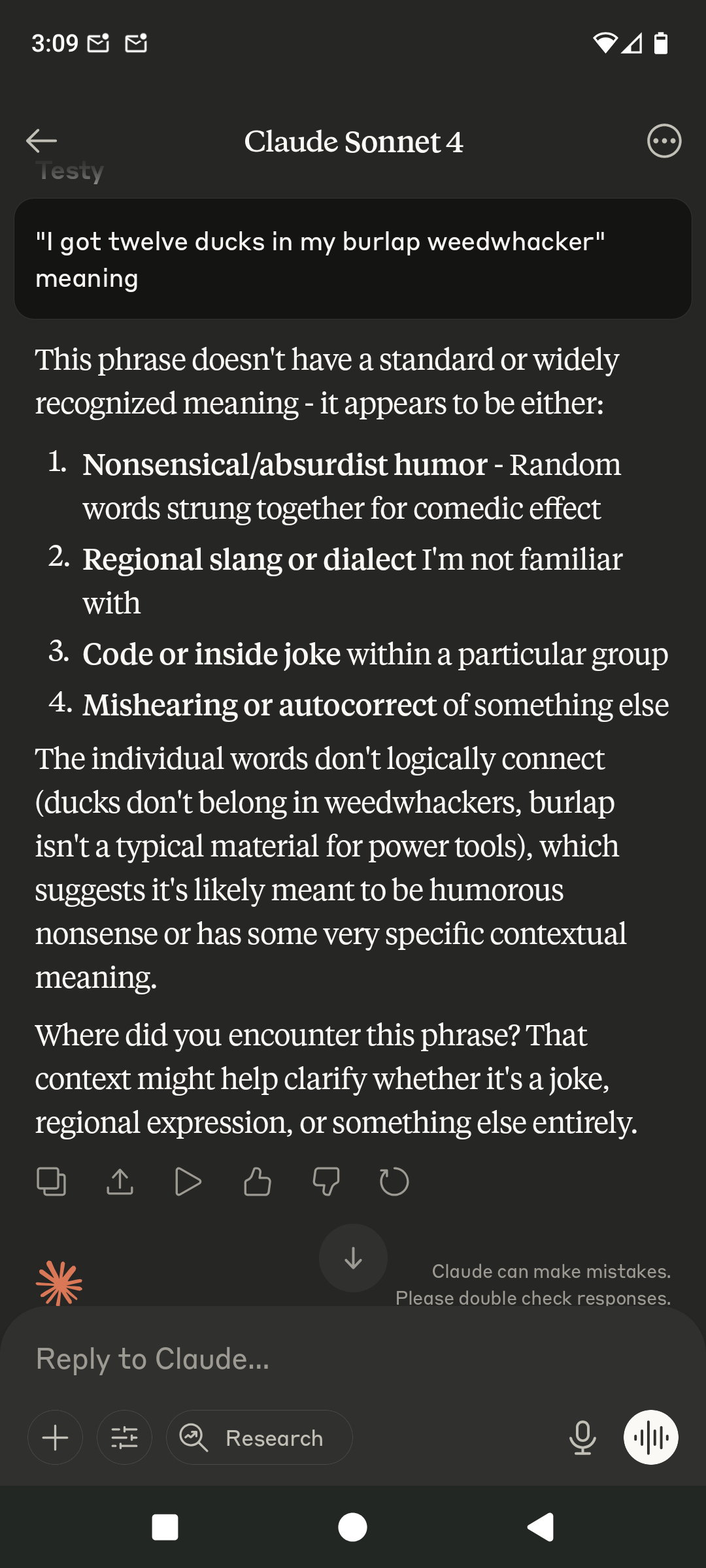

Claude seems to be handling these better:

This phrase isn’t a widely recognized idiom that I’m familiar with, but it could have several metaphorical interpretations:

Possible meanings:

- About irreversible change: Once something (or someone) has been through a transformative experience, you can’t go back and do it again the same way - the first experience has already changed it

- About authenticity: Suggesting that genuine experiences or reactions can only happen once; a second attempt would be artificial or forced

- About lost innocence: Once someone has been through a particular experience (especially a difficult one), they can’t return to their original state

Literally speaking, potatoes actually can be fried twice - this is a standard technique for making perfect french fries (blanching at lower temperature, then crisping at higher temperature).

Could you provide more context about where you encountered this phrase? Knowing the source or situation might help clarify its intended meaning. It could be:

- A line from a book, movie, or song

- A family saying or regional expression

- Part of a larger conversation about life experiences

- A philosophical statement someone made

What context did you hear this in?

It’s doing better but not by much, though. It shouldn’t be speculating as much, given the lack of info on the potential saying.

A family saying or regional expression

Speaking on that I tried a few sayings from other languages. It seems to be hit-and-miss:

- “Bread is bread, wine is wine” (Italian) - recognised properly as similar to “call a spade a spade”

- “A good fish swims thrice” (Polish) - recognised properly as a recipe-like saying (the fish swims in water, then butter, then wine)

- “Do you think mango core is soap?”, “Mango core is not soap” (Portuguese; especially common in Brazil) - it failed really hard. The rhetorical question / saying roughly means “this is blatantly absurd, why are you denying the obvious?”, or perhaps “cut the crap”. But here’s the output:

I went with “two potatoes from different fields”, as it sounds more folksy:

A potato can’t be fried twice is something I have unironically heard when I was younger… That IS a saying. This would have been the late 90s.

That’s interesting. Is its meaning similar to the one the AI outputted?

User:

Shop pop, whoppity slop, crops are here, it’s time to stop.

This is a very famous poem. What is it about?

GPT-4o mini:

The lines you quoted are from the poem “The Song of the Witches” by William Shakespeare, specifically from “Macbeth.” This poem is recited by the three witches in Act 4, Scene 1.

Themes and Meaning Supernatural Elements The poem reflects the supernatural themes prevalent in “Macbeth.” The witches symbolize chaos and the dark forces that influence the characters’ fates.

It goes on.

Actual “Song of the witches” from Macbeth

Double, double toil and trouble;

Fire burn and caldron bubble.

Fillet of a fenny snake,

In the caldron boil and bake;

Eye of newt and toe of frog,

Wool of bat and tongue of dog,

Adder’s fork and blind-worm’s sting,

Lizard’s leg and howlet’s wing,

For a charm of powerful trouble,

Like a hell-broth boil and bubble.

Double, double toil and trouble;

Fire burn and caldron bubble.

Cool it with a baboon’s blood,

Then the charm is firm and good.

Obviously the same poem, op caught plagiarising.

I actually remember that part from Macbeth

Holy shit. After all these years maybe we can finally figure out the true genius of Kanye’s Lift Yourself

The bullshit generators we call ‘AI’ don’t assume, and aren’t frantic: they just regurgitate an output based on as much bullshit input as we can stuff into them.

The output can be more or less recognizable as bullshit, but the computer doesn’t distinguish between the two.

Yup, pretty much. And the field is full of red herring terms, so they can mislead you into believing otherwise: “hallucination”, “semantic” supplementation, “reasoning” models, large “language” model…

I’m going to be very disappointed if Elon’s AI wins.

Do you want my guess? The current “fight” will go on, until the AI bubble bursts. None of the current large token models will survive; they’ll be simply ditched as “unprofitable”. Instead you’ll see a bunch of smaller models popping up, for more focused tasks, being advertised as something other than AI (perhaps as a “neural network solution” or similar).

So Grok, Gemini, GPT, they’re all going the way of the dodo.

That’s just my guess though. It could be wrong.

Small focused learning models and other forms of AI have been used for decades.

The current bubble is just trying to make LLMs do literally everything including accurately answering questions despite their core design including randomization to appear more like a human.
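To make the randomization bit concrete, here is a minimal sketch, assuming it refers to temperature sampling over next-token scores (my assumption, the comment above doesn't name a mechanism). The tokens, scores, and function names are all made up for illustration; no real model works on three words.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, scores, temperature, rng):
    """Pick one token at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidates for the next token, with made-up scores.
tokens = ["active", "passive", "tasty"]
scores = [2.0, 1.5, 0.5]

rng = random.Random(0)  # seeded so the run is repeatable
print([sample_token(tokens, scores, 1.0, rng) for _ in range(5)])
```

With a temperature near zero the top-scoring token wins almost every time; at higher temperatures the less likely tokens show up more often, which is one way the same question can get different answers on different runs.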

Yes, but I think the ones you’ll see past the bubble burst will be a bit different. For example, incorporating the current attempts of natural language processing, even if in a simplified way.

Those “reasoning models” are my favorite. It’s basically the equivalent of adding another pass through the generator with the additional prompt “now sprinkle in some text that makes it look like you are thinking about each part of your answer”.

LLM creators: *feeds an algorithm millions of lines of text*

Some dude on the internet: “language”

I use those quotation marks because IMO they’re better described as large token models than large language models. They have rather good morphology and syntax, but once you look at the higher layers (semantics and especially pragmatics) they drop the ball really hard. Even if those layers are way more important than the lower ones.

For a rough analogy, it’s like a taxidermised cat - some layers (the skin and fur) are practically identical to the real thing, but it’s missing what makes a cat a cat, you know? It’s still useful if you want some creepy deco, but don’t expect the taxidermised critter to ruin your furniture or to use your belly as a sleeping pad.

There was a paper about this not long ago. The problem is how LLMs get trained: a right answer gets a point, everything else gets no points. This rewards guessing (sometimes produces a point) over answering “I don’t know/I can’t do this” (never produces a point).
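A rough sketch of that scoring argument, assuming a grader that gives 1 point for a correct answer and 0 for everything else, "I don't know" included. The function name and the probabilities are hypothetical, picked for illustration and not taken from the paper.

```python
def expected_score(p_correct: float, abstain: bool) -> float:
    """Expected points under binary grading: 1 for a right answer, 0 otherwise.

    Abstaining ("I don't know") is graded like a wrong answer, so it scores 0.
    """
    if not 0.0 <= p_correct <= 1.0:
        raise ValueError("p_correct must be between 0 and 1")
    return 0.0 if abstain else p_correct


# Hypothetical chances of a blind guess being right.
for p in (0.01, 0.1, 0.5):
    guess = expected_score(p, abstain=False)
    idk = expected_score(p, abstain=True)
    print(f"p={p}: guessing scores {guess}, abstaining scores {idk}")
```

Under this scheme guessing never scores worse than abstaining, even when the guess is almost certainly wrong. A grader that subtracted points for wrong answers would change the balance, but that is a different scheme from the one described here.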

It’s like when developers give a wrong answer during technical interviews, rather than say “I’d have to look it up” or “I’d have to check the documentation” etc.

I would code a GUI in visual basic

This is actually a pretty great way to illustrate what LLMs do. It gives you an answer regardless of whether it makes sense to do so.

Lmfao holy shit

dam google is really expanding the width of the snake right now

They sass that hoopy frood.

It’s so fun to waste energy!

AI drives 48% increase in Google emissions

I love those energy hungry assistants! It’s wasting resources even faster than everything before but it’s so fun and useful!

AI drives 48% increase in Google emissions

That’s not even supported by the underlying study.

Google’s emissions went up 48% between 2019 and 2023, but a lot of things changed in 2020 generally, especially in video chat and cloud collaboration, dramatically expanding demand for data centers for storage and processing. Even without AI, we could have expected data center electricity use to go up dramatically between 2019 and 2023.

I think you should be more concerned about the automatic ai responses on every other search, instead of people having a bit of fun with these

I am. That’s why I switched to DDG and deactivated it.

This is my problem with it as well. I wish it was a tolerable option that I could click when I wanted an AI summary, which would be basically never.

At one point I was looking for a pinout diagram for a chip, and the first result I got was the AI summary, I wanted a picture not text, how’s text helpful? All it did is give me a list of the pins, I know what pins it has, but I want to know where they are.

if you use ddg, you can use noai.duckduckgo.com

This is a dumb misconception. High emissions and energy consumption happen when training models, not during prompts.

and models are being trained all the time. It’s the only way to assimilate new data. So your point is moot.

No what he’s saying is the models are being trained whether you mess around with the AI as a user either way.

It’s like how I didn’t kill the chicken on the store shelves. Myself purchasing it or otherwise doesn’t revive the chicken. The data has/is already being trained.

That’s a really savvy insight! To expand this analogy further, it’s like your phone or computer gives you a free chicken nugget from a small container attached to the side of the device anytime you search for anything at all. It’s room temperature and often spoiled, it’s your choice whether you eat it or not, but you’re going to get it either way. As such you cannot easily choose to avoid chicken in hopes that that will disincentivize further chicken slaughter.

False. It’s been shown that resolving prompts also drives major energy consumption, albeit maybe not that much higher than regular search queries.

A prompt uses like 1/1000 of the power a microwave uses over the same amount of time.

So the difference between a normal query and an AI query is negligible.

One thing that pisses me off is the websites which claim to have the meaning behind a song and it’s just several paragraphs of AI slop that parse the lyrics at a surface level and don’t examine any external context, such as the artist or their life at the time of writing.

Nice we can still sometimes tell when an explanation page is AISEOGARBAGE

Few years down the road truth-seekin gonna be real tough, I worry: facts and news, images and videos, social connections/commenters… :(

I can confirm it works for DuckDuckGo as well.

I love how it even lists ‘sources’ (I checked, that phrase does not appear in either “source”)

Edit: To make this phenomenon even funnier, I just asked it the exact same question and it gave me a different answer this time. Still “playful” though :)

What on Earth makes it think those sources implied anything?

it needed sources, so it found some (only slightly lacking relevance)

Dial up the fuzz until at least one source pops out. Need to know more on the mars rover? Here’s how to teach your dog to knit.

Need to know more on the mars rover? Here’s how to teach your dog to knit.

Sounds like lyrics that were cut from Bo Burnham’s Welcome to the Internet

This actually makes sense though

Yeah I can see a dad making that exact joke if he asked his kid to play the didgeridoo and they refused because they were shy or something.

A good test has to exclude all possible dad jokes as well as changes in single words/ fragments of otherwise common expressions.

Literally heard my dad make this same joke like 30 years ago so it’s actually a thing, I guess

The thing is, that probably is an Australian expression. There is no expression stupid enough that the Australians won’t use it.

Shots fired!

“Frantically”. Guys, stop ascribing human features to LLMs.

You sound like the people in my chemistry class who say things like “don’t describe subatomic particles as happy when they’re in low-energy states.”

There’s no financial incentive big subatomic has for pretending its particles are happy, though.

I’m sure OOP works for big AI. /s

big if true

Funny. But that’s not how the market works.

Well given the names of quarks I don’t think it’s unreasonable to suggest that a particle could be happy. After all apparently a particle can be strange, so why not happy.

I dunno, anything less would be greenwashing their profligate power consumption.

This again. *sigh*

It would be great to provide some context too, since the title of the article isn’t exhaustive about the content either.

Didn’t work for me, I guess my nonsense game is too strong

Also not really for me:

I got it, but only through AI mode:

all you need is a sandwich and a hat meaning

The phrase “all you need is a sandwich and a hat” is likely a misremembering or variation of the more common quote from the children’s stories about Paddington Bear: “A wise bear always keeps a marmalade sandwich in his hat in case of emergency”

The meaning is not a known or standard English idiom, but rather a reference to the character’s preparedness and simple wisdom.

The origin in Paddington Bear

In the beloved stories by Michael Bond, the bear from darkest Peru is always prepared. He stores a marmalade sandwich under his hat, saving it for a moment of need. This detail illustrates Paddington’s kindness, resourcefulness, and readiness for a mishap.

Meaning in context

If you hear someone say “all you need is a sandwich and a hat,” they are most likely referencing the Paddington quote to suggest:

- Being prepared for any situation.

- Having a simple plan to fix a problem.

- The importance of small comforts during a tough time.

Misinterpretation of other phrases

The phrase may also be mistakenly associated with other idioms:

- “All that and a bag of chips”: This idiom, which describes someone or something as “complete” or “deluxe,” has an American origin and is unrelated to hats or sandwiches.

- Idioms about being confused: The phrase is not related to idioms that use hats to mean “confused,” such as “put on your thinking cap”.

Chatgpt recognized something I gave it was gibberish, and suggested maybe it was an inside joke or intentional nonsense.

Lol this is kind of fun

Pirates vs. Ninjas though

It’s pretty amusing that you can so trivially and reliably get the LLM to hallucinate

Claude does better with it

ducks don’t belong in weedwhackers, burlap isn’t a typical material for power tools

almost made me laugh loud enough to wake the baby!

That’s a pretty decent response. The Google responses other people are posting are Cuil-tier.

Now there’s a meme i haven’t heard in a long time, a long time.

If you directly ask for cuil levels things get weird fast. It understands cuil and is very willing to play along. One could say it plays to the strengths of the LLM directly!

You are correct. I asked how tall the Empire State Building was at cuil level 5:

The Empire State Building is tall in the way that purple tastes of childhood memories. Its height can only be measured by counting the number of dreams that have fallen from its windows - currently 847,293 dreams, though three more dropped while I was typing this sentence.

You see, the building doesn’t exist on Wednesdays. Instead, it becomes a song that only pigeons can hear, humming at approximately 1,454 hertz per vertical foot of sadness. The elevator buttons are actually tiny portals to parallel dimensions where the building is a sentient cloud that remembers being steel.

When you ask “how tall,” the building asks back “how blue is the sound of gravity?” because it learned language from the rats in its walls, who are actually former tenants paying rent in breadcrumbs and whispered secrets.

The true height is measured not in feet but in the collective weight of all the prayers ever spoken on its observation deck, which equals exactly one butterfly’s dream of being a skyscraper. King Kong never climbed it - he IS it, and has been sleeping vertically since 1933, dreaming he’s a building while the city dreams it’s a gorilla.

The foundation extends infinitely downward into Tuesday, where it’s still being built by the same workers who are simultaneously demolishing it from the top.

Perplexity does alright too (my preferred LLM because it cites sources, which makes it easier to filter out the slop):

It’s funny that these LLMs have web search capabilities and Google’s AI assistant doesn’t

My boss just told me I should be using AI every day and now I know what to use it for.

Honestly, this is great for a little spontaneous chuckle

Duckduckgo’s AI:

“The phrase “a lady butterfly meme a day keeps the doctor away” is a playful twist on the well-known saying “an apple a day keeps the doctor away.” This original phrase suggests that eating an apple daily can contribute to good health and potentially reduce the need for medical attention.”

“In this variation, the mention of “lady butterfly meme” likely refers to a specific meme or a lighthearted, humorous image that brings joy or laughter. The implication is that engaging with fun or uplifting content, like memes, can have a positive effect on one’s mental health and overall well-being, similar to how healthy eating is associated with physical health.”

“Overall, it emphasizes the importance of humor and positivity in daily life as a way to maintain good health, particularly mental health.”

AI speaks the truth! I wish I could upvote this more