- cross-posted to:

- firefox@fedia.io

“It’s safe to say that the people who volunteered to ‘shape’ the initiative want it dead and buried. Of the 52 responses at the time of writing, all rejected the idea and asked Mozilla to stop shoving AI features into Firefox.”

Hear me out.

This could actually be cool:

If I could, say, mash in “get rid of the junk in this page” or “turn the page this color” or “navigate this form for me”

If it could block SEO and AI slop from search/pages, including images.

If I can pick my own API (including local) and sampling parameters

If it doesn’t preload any model in RAM.

…That’d be neat.

What I don’t want is a chatbot or summarizer or deep researcher because there are 7000 bajillion of those, and there is literally no advantage to FF baking it in like every other service on the planet.

And… Honestly, PCs are not ready for local LLMs. Not even the most exotic experimental quantization of Qwen3 30B is ‘good enough’ to be reliable for the average person, and it still takes too much CPU/RAM. And whatever Mozilla ships would be way worse.

That could change with a good bitnet model, but no one with money has pursued it yet.

That would be awesome. Like a greasemonkey/advanced unlock for those of us who don’t know how to code. So many times I wanted to customise a website but I don’t know how or it’s not worth the effort.

But only if it was local, and especially on mobile, where I need it the most, that will be impossible for years…

I mean, you can run small models on mobile now, but they’re mostly good as a cog in an automation pipeline, not at (say) interpreting english instructions on how to alter a webpage.

…Honestly, open-weight model APIs for one-off calls like this are not a bad stopgap. It costs basically nothing, you can use any provider you want, it’s power efficient, and if you’re on the web, you have internet.

You mean using an online LLM?

No. That’s what I don’t want. If it was a company I trusted I would, but good luck with that. Mozilla is not that company anymore, even if they had the resources to host their own.

But locally or on a server I trust? That would be awesome. AI is awesome, but not the people who run it.

I mean, there are literally hundreds of API providers. I’d probably pick Cerebras, but you can take your pick from any jurisdiction and any privacy policy.

I guess you could rent an on-demand cloud instance yourself too, that spins down when you aren’t using it.

The auto-translation LLM runs locally and works fine. Not quite as good as deepl but perfectly competent. That’s the one “AI” feature which is largely uncontroversial because it’s actually useful, unobtrusive, and privacy-enhancing.

Local LLMs (and related transformer-based models) can work, they just need a narrow focus. Unfortunately they’re not getting much love because cloud chatbots can generate a lot of incoherent bullshit really quickly and that’s a party trick that’s got all the CEOs creaming their pants at the ungrounded fantasy of being just another trillion dollars away from AGI.

Yeah that’s really awesome.

…But it’s also something the anti-AI crowd, which is a large part of FF’s userbase, would hate once they realize it’s an ‘LLM’ doing the translation. The well has been poisoned by said CEOs.

I don’t think that’s really fair. There are cranky contrarians everywhere, but in my experience that feature has been well received even in the AI-skeptic tech circles that are well educated on the matter.

Besides, the technical “concerns” are only the tip of the iceberg. The reality is that people complaining about AI often fall back to those concerns because they can’t articulate how most AI fucking sucks to use. It’s an eldritch version of Clippy. It’s inhuman and creepy in an uncanny valley kind of way, half the time it doesn’t even fucking work right, and even if it does it’s less efficient than having a competent person (usually me) do the work.

Auto translation or live transcription tools are narrowly-focused tools that just work, don’t get in the way, and don’t try to get me to talk to them like they are a person. Who cares whether it’s an LLM. What matters is that it’s a completely different vibe. It’s useful, out of my way when I don’t need it, and isn’t pretending to have a first name. That’s what I want from my computer. And I haven’t seen significant backlash to that sentiment even in very left-wing tech circles.

You know what would be really cool? If I could just ask AI to turn off the AI in my browser. Now that would be cool.

I can’t fucking believe I’m agreeing with a .world chud.

Well fortunately for you, I don’t know what that means.

Your server has, if not a monopoly on, then a majority of the worst shitlibs and other chuds. To the point I’m genuinely surprised to be agreeing with someone there, and am worried that when I examine it closely you’ll be agreeing with me for some unthinkably horrible reason.

The problem is I fundamentally do not understand how Lemmy works, so I just picked what seemed obvious. Like why wouldn’t I want the world.

Also I thought from just reading sub-Lemmies? that .ml was the crap hole.

Also, I looked up Chud and that’s really mean.

You’re on the shitlib chud server; shit happens.

I would say that while there are general rules of thumb, it’s generally good to never assume the intentions or beliefs of another user based solely on their home server. There are nice people all over, and there are also a lot of assholes all over.

By the way, as to your question mark, they are just called “Communities” on Lemmy typically, though I think some instances call them something different occasionally.

You can do this now:

Hardest part is hosting open-webui because AFAIK it only ships as a docker image.

Edit: s/openai/open-webui

Open WebUI isn’t very ‘open’ and kinda problematic last I saw. Same with ollama; you should absolutely avoid either.

…And actually, why is Open WebUI even needed? For an embeddings model or something? All the browser should need is an OpenAI-compatible endpoint.

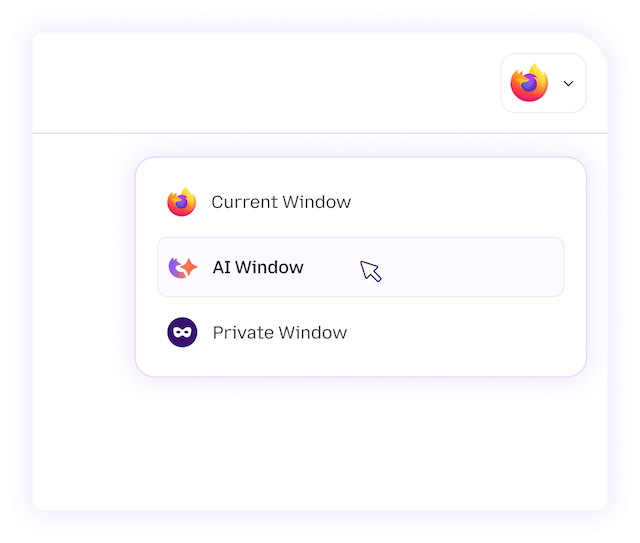

The Firefox AI sidebar embeds an external open-webui. It doesn’t roll its own UI for chat. Everything with AI is done in the quickest, laziest way.

What exactly isn’t very open about open-webui or ollama? Are there some binary blobs or weird copyright licensing? What alternatives are you suggesting?

https://old.reddit.com/r/opensource/comments/1kfhkal/open_webui_is_no_longer_open_source/

https://old.reddit.com/r/LocalLLaMA/comments/1mncrqp/ollama/

Basically, they’re both using their popularity to push proprietary bits, which their development is shifting to. They’re enshittifying.

In addition, ollama is just a demanding leech on llama.cpp that contributes nothing back, while hiding the connection to the underlying library at every opportunity. They do scummy things like:

Rename models for SEO, like “Deepseek R1” which is really the 7b distill.

It has really bad default settings (like a 2K default context limit, and default imatrix-free quants) which give local LLM runners bad impressions of the whole ecosystem.

They mess with chat templates, and on top of that, create other bugs that don’t exist in base llama.cpp

Sometimes, they lag behind GGUF support.

And other times, they make their own sloppy implementations for ‘day 1’ support of trending models. They often work poorly; the support’s just there for SEO. But this also leads to some public GGUFs not working with the underlying llama.cpp library, or working inexplicably badly, polluting the issue tracker of llama.cpp.

I could go on and on with examples of their drama, but needless to say most everyone in localllama hates them. The base llama.cpp maintainers hate them, and they’re nice devs.

You should use llama.cpp llama-server as an API endpoint. Or, alternatively, the ik_llama.cpp fork, kobold.cpp, or croco.cpp. Or TabbyAPI as an ‘alternate’ GPU-focused quantized runtime. Or SGLang if you just batch small models. llama-cpp-python, LM Studio; literally anything but ollama.
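For anyone wondering what “use llama-server as an API endpoint” looks like from the client side, here is a rough Python sketch of a one-off call to an OpenAI-compatible chat endpoint. Assumptions on my part, not something from this thread: the server is at `http://localhost:8080` (llama-server’s default port, adjust if yours differs), the model name `local-model` is a placeholder, and the helper names `build_chat_request` and `send` are made up for illustration. Swapping `base_url` and adding an API key should point the same code at a hosted provider.

```python
import json
import urllib.request


def build_chat_request(base_url, prompt, model="local-model",
                       temperature=0.2, max_tokens=256, api_key=None):
    """Build a POST request for an OpenAI-compatible /v1/chat/completions endpoint.

    `model` is a placeholder name; hosted providers need a real model id.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Sampling parameters are plain request fields, so you control them.
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Hosted providers typically expect a bearer token.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Usage (needs a running server, so it is left commented out):
# req = build_chat_request("http://localhost:8080", "Say hi in one word.")
# print(send(req))
```

Nothing in it is specific to one runtime, which is kind of the point: the client only cares that the endpoint speaks the same API.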

As for the UI, that’s a muddier answer and totally depends on what you use LLMs for. I use mikupad for its ‘raw’ notebook mode and logit displays, but there are many options. Llama.cpp has a pretty nice built-in one now.