I have no plans to support p92, precisely because it’s going to “push” users together as a commodity. What Meta has jurisdiction over is not communities but rows of data. In the same way that Reddit’s admins have come into conflict with its mods, Meta is inherently not organized in a way that lets it properly represent any specific community or its actions.

So the cost-benefit from the side of extant fedi is very poor: it won’t operate in a standard way, because it can’t, and each additional user won’t be worth the pain of dealing with that. Most of them will just be confused at being presented with a new space, and if the nature of that space is hidden from them, it will become an endless misunderstanding.

If a community using a siloed platform wants to federate, that should be a self-determined thing, and they should front the effort to remain on a similar footing to other federated communities. The idea that either side here inherently wants to connect and just “needs a helping hand” is simply wrong.

An overview of PPQN:

http://midi.teragonaudio.com/tech/midifile/ppqn.htm

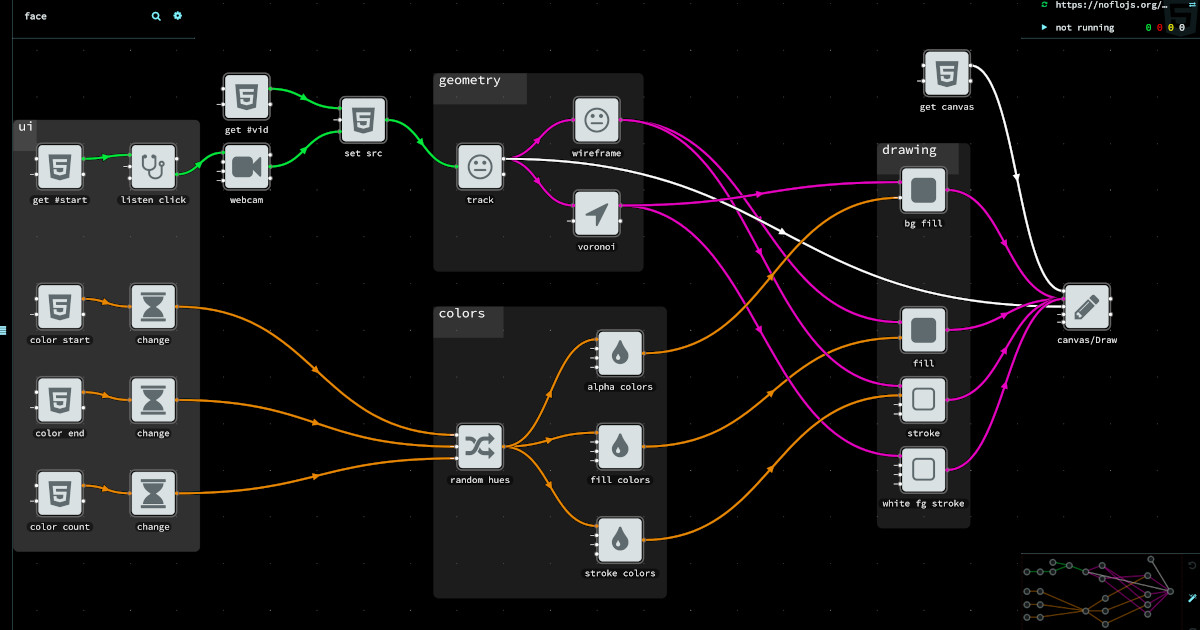
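
For what it’s worth, the core arithmetic is small. Here is a rough sketch in Python, assuming the usual Standard MIDI File convention where tempo is stored as microseconds per quarter note (500,000 by default, i.e. 120 BPM) and PPQN is the number of ticks per quarter note. The function names are made up for illustration and are not taken from any particular library.

```python
# Sketch of PPQN timing math. Names are hypothetical, chosen for illustration.

DEFAULT_TEMPO_US = 500_000  # microseconds per quarter note (120 BPM)


def bpm_to_tempo(bpm: float) -> int:
    """Convert beats per minute to microseconds per quarter note."""
    return round(60_000_000 / bpm)


def ticks_to_seconds(ticks: int, ppqn: int,
                     tempo_us: int = DEFAULT_TEMPO_US) -> float:
    """Convert a tick count to seconds at a single, constant tempo.

    One quarter note lasts tempo_us microseconds and is split into
    ppqn ticks, so one tick lasts tempo_us / ppqn microseconds.
    """
    return ticks * tempo_us / (ppqn * 1_000_000)


if __name__ == "__main__":
    # One quarter note (480 ticks at 480 PPQN) at the default tempo.
    print(ticks_to_seconds(480, 480))
    # Two quarter notes at 60 BPM.
    print(ticks_to_seconds(960, 480, bpm_to_tempo(60)))
```

This only covers a constant tempo. A file with tempo changes would need the conversion applied piecewise, segment by segment, between each tempo event.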

Some reference code I’ve used, which might help with architectural issues: https://github.com/triplefox/minimidi/tree/master/minimidi